I don’t like CoffeeScript.

I’m sorry. I know I just offended half of the JavaScript community, but I have to get that off my chest. I feel like it adds an unnecessary abstraction and needless syntactic sugar over top of a language that is already pretty expressive and easy to understand. To me, it smacks of laziness and a sense of entitlement. ‘Ugh, I just can’t be bothered to write semicolons and curly brackets. Who has the time?’

Shortly after my initial displeasure at learning of CoffeeScript, I learned about ClojureScript and I went ballistic. It’s ironic, because I’m a huge Clojure fan and an even bigger fan of JavaScript; I just didn’t understand why ClojureScript was necessary at the time. Again, it had an air of hipster malaise: ‘Not only do I refuse to put semicolons and curly brackets in my JavaScript code, but I refuse to put JavaScript code in my JavaScript code. I want to write Lisp! Let’s go grow an ironic beard.’

My knee-jerk reaction to these new languages was visceral. If you want to use fat arrows and you want your indentations to have semantic meaning, then use Haskell. If you want to write in a Lisp dialect then fire up a JVM and write Clojure. If you have a problem designing something in JavaScript, learn how to solve it…in JavaScript! And get off my lawn, hipsters!

I felt like the community was way off-base and developers were wasting their time on languages and approaches that served no real purpose.

Until I realized I was the one who was way off-base.

I used to think that CoffeeScript, ClojureScript, and tools like CSS preprocessors suffered from what I dubbed the ALOHA Syndrome (Another Level of Horrible Abstraction): the result of programmers with too much time on their hands trying to be clever. But then a colleague at a client where I was working posed an interesting question to me: ‘OK, then by that train of thought, should we all be writing in Assembly?’ Hmmmm…

Compile All The Things

I submit to you that JavaScript IS the new Assembly language. It is the universal language of the browser, a target language to which source code can be compiled. Developers can choose to write JavaScript directly (a much easier task than writing actual assembly), or they can pick a language that expresses their problem space and has the semantics that help them approach a solution.
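
To make the idea of JavaScript as a compile target concrete, here is a toy compiler. It is a minimal sketch, not how any real compile-to-JS language is built: it assumes a hypothetical Lisp-style arithmetic language and turns it into JavaScript source text. `tokenize`, `parse`, `emit`, and `compileToJs` are illustrative names.

```typescript
// A toy compiler: Lisp-style arithmetic in, JavaScript source text out.
// The mini-language and every name here are hypothetical, for illustration only.

type Expr = number | string | Expr[];

// Split "(+ 1 (* 2 3))" into ["(", "+", "1", "(", "*", "2", "3", ")", ")"].
function tokenize(src: string): string[] {
  return src.replace(/\(/g, " ( ").replace(/\)/g, " ) ").trim().split(/\s+/);
}

// Build a nested array (the syntax tree) from the token stream.
function parse(tokens: string[]): Expr {
  const tok = tokens.shift();
  if (tok === undefined) throw new Error("unexpected end of input");
  if (tok === "(") {
    const list: Expr[] = [];
    while (tokens[0] !== ")") {
      if (tokens.length === 0) throw new Error("missing )");
      list.push(parse(tokens));
    }
    tokens.shift(); // drop the closing ")"
    return list;
  }
  if (tok === ")") throw new Error("unexpected )");
  const n = Number(tok);
  return isNaN(n) ? tok : n;
}

const BINARY_OPS = ["+", "-", "*", "/"];

// Emit JavaScript source for one node of the tree.
function emit(expr: Expr): string {
  if (typeof expr === "number") return String(expr);
  if (typeof expr === "string") return expr; // bare symbols pass through as JS identifiers
  const [op, ...args] = expr;
  if (typeof op !== "string" || BINARY_OPS.indexOf(op) === -1) {
    throw new Error("unsupported operator: " + String(op));
  }
  if (args.length < 2) throw new Error("operator needs at least two operands");
  // (+ a b c) becomes (a + b + c)
  return "(" + args.map(emit).join(" " + op + " ") + ")";
}

function compileToJs(src: string): string {
  return emit(parse(tokenize(src)));
}

console.log(compileToJs("(+ 1 (* 2 3))")); // (1 + (2 * 3))
```

Real compilers add scoping, a runtime library, and source maps, but the overall shape is the same: parse one language, emit another.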

This movement is evident in the fact that new languages that cross-compile to JavaScript are sprouting up every day. In fact, the CoffeeScript wiki on GitHub has a whole list of them:

https://github.com/jashkenas/coffeescript/wiki/list-of-languages-that-compile-to-js

It’s no secret to any programmer with a pulse that JavaScript is the new hotness. The recent explosion of development and community activity is incredible. The web is the new VM; the browser is the new IDE. The ability to compile to JavaScript is huge and opens the door to a whole host of possibilities. Consider that some of the features CoffeeScript provides are making their way into the ECMAScript 6 specification: classes, destructuring, splats (ES6 rest parameters and spread), and fat arrow syntax are all slated for ES6. Would any of these features have been added to the spec if CoffeeScript hadn’t come along and nudged the community forward? Perhaps, but when a new language patches some ugly holes in your language and then catches fire, the standards bodies are going to sit up and take notice. Competition is good, and having alternatives that provide different ways of accomplishing tasks will only serve to better the ecosystem.
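
For reference, here is roughly how those CoffeeScript-inspired features look in ES6-era syntax. This sketch is written in TypeScript, which accepts the same ES6 syntax plus type annotations; `Counter` and `sumAll` are made-up examples, and the comments only note which CoffeeScript feature each construct corresponds to.

```typescript
// ES6 features that CoffeeScript offered first, written here in TypeScript.

// Classes: CoffeeScript had a `class` keyword years before ES6.
class Counter {
  private count = 0;

  constructor(readonly label: string) {}

  addAll(values: number[]): number {
    // Fat arrow: the callback keeps the Counter's `this`, like CoffeeScript's `=>`.
    // An ES5 `function () {}` callback here would get its own `this` instead.
    values.forEach((v) => {
      this.count += v;
    });
    return this.count;
  }
}

// Splats: CoffeeScript's `(first, rest...) ->` became ES6 rest parameters.
function sumAll(first: number, ...rest: number[]): number {
  return rest.reduce((acc, n) => acc + n, first);
}

// Destructuring: pull values out of objects and arrays in one statement.
const counter = new Counter("clicks");
const { label } = counter;
const [head, ...tail] = [1, 2, 3, 4];

// Spread: the call-site counterpart of a splat.
const total = sumAll(head, ...tail);
console.log(label, total, counter.addAll(tail)); // clicks 10 9
```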

Linguistic Relativity

Another benefit of this whole JavaScript-as-Assembly movement is the idea of linguistic relativity. In a 2012 episode of the JavaScript Jabber podcast, the topic was CoffeeScript, with its creator Jeremy Ashkenas. During the episode, the idea of linguistic relativity came up: the concept that the syntax of a language shapes the ways you find to express yourself. It is this core idea that explains why all of these languages compiling to JavaScript are a good thing for finding new ways to solve problems.

Take ClojureScript, for example, which is essentially a compiler that turns Clojure code into JavaScript. One of Clojure’s main features is immutability, which is absolutely not a main feature of JavaScript. Therefore, a programmer who knows Clojure well and would like to structure a web application around immutable data structures now has an option. Rather than writing insanely verbose and defensive JavaScript by hand, they can write Clojure, rely on its persistent data structures to manage immutability, and compile all of that to JavaScript. As a result, a whole host of front-end libraries written in ClojureScript have sprung up, such as Domina, a jQuery-like DOM manipulation library, and Om, a ClojureScript interface to Facebook’s React library.
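
To give a feel for the verbose, defensive JavaScript in question, here is a minimal sketch of hand-rolled immutability in TypeScript. The helpers `deepFreeze` and `setIn` are hypothetical (the latter loosely named after Clojure’s `assoc-in`), and they use plain object copying rather than the trie-based persistent data structures ClojureScript actually relies on.

```typescript
// Hand-rolled immutability: the defensive code that ClojureScript's
// persistent data structures make unnecessary.
// `deepFreeze`, `setIn`, and `Json` are hypothetical names, not a library API.

type Json = { [key: string]: unknown };

// Recursively freeze an object graph so accidental mutation is rejected.
// Already-frozen objects are skipped, which also stops infinite loops on cycles.
function deepFreeze<T>(value: T): T {
  if (value !== null && typeof value === "object" && !Object.isFrozen(value)) {
    Object.freeze(value);
    const obj = value as unknown as Json;
    for (const key of Object.keys(obj)) {
      deepFreeze(obj[key]);
    }
  }
  return value;
}

// Return a new version of `obj` with the value at `path` replaced.
// Only the objects along the path are copied; every other branch is shared
// with the original version (simple path copying).
function setIn(obj: Json, path: string[], newValue: unknown): Json {
  if (path.length === 0) throw new Error("path must not be empty");
  const [key, ...rest] = path;
  const child = obj[key];
  const updated =
    rest.length === 0
      ? newValue
      : setIn(child !== null && typeof child === "object" ? (child as Json) : {}, rest, newValue);
  return deepFreeze({ ...obj, [key]: updated });
}

const v1 = deepFreeze({ user: { name: "Ada", prefs: { theme: "light" } }, items: [1, 2] });
const v2 = setIn(v1, ["user", "prefs", "theme"], "dark");

console.log(v1.user.prefs.theme); // light
console.log(((v2.user as Json).prefs as Json).theme); // dark
console.log(v2.items === v1.items); // true
```

The last line shows the untouched `items` array being shared between versions rather than copied; in ClojureScript, that bookkeeping is the language’s job instead of yours.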

Another example of linguistic relativity is the Dart language. Its creators felt JavaScript lacked a few things and that, as a result, developers were hindered from writing large-scale applications. So they created Dart and added features such as type safety, true class-based inheritance, and ordered loading of files when resolving dependencies. Basically, Dart seeks to take a more structured approach to writing JavaScript than even ES6 will eventually provide.

Further, Dart’s Wikipedia page says that Google hopes Dart will become the ‘lingua franca’ of the open web. That’s a pretty bold statement, and in reality it is probably a pipe dream on Google’s part. The better path for Dart seems to be to blaze a trail for optimized JavaScript engines or VMs in the browser. Currently, for Dart to run in a browser, it either has to be compiled down to JavaScript or run inside a Dart VM. The only browser right now that ships with a Dart VM is Dartium, a Chromium offshoot provided by Google. It sounds very closed-source and walled-garden, but when you stop to think about it, the concept is intriguing:

A separate VM running in the browser allows for optimizations that just aren’t possible in JavaScript VMs such as V8. Because JavaScript is so dynamic, engines cannot safely make certain assumptions, which leads to performance limits that can’t be fully overcome. For example, because the structure of an object can change at runtime, the engine can never be sure what properties it will have at any given moment, so optimizations can only be taken so far. Granted, V8 and its ilk, like SpiderMonkey, are still pretty performant, but the fact remains that JavaScript itself just can’t be completely tamed.
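
The dynamism described above is easy to demonstrate. The TypeScript sketch below cannot observe engine internals (V8 tracks object layouts internally as what it calls hidden classes); it only shows how freely the set and order of an object’s properties can change while a program runs, which is what keeps an engine from committing to a single layout. `Bag`, `shapeOf`, and `getX` are illustrative names.

```typescript
// Why an engine can't assume an object's layout ahead of time.
// This code cannot see engine internals; it only shows how the set and
// order of properties can change while the program runs.

type Bag = Record<string, number>;

// Describe an object's "shape" as its property names in insertion order.
function shapeOf(obj: object): string {
  return Object.keys(obj).join(",");
}

const p1: Bag = { x: 1, y: 2 };
const p2: Bag = { y: 2, x: 1 }; // same fields, different insertion order
const p3: Bag = { x: 1, y: 2 };
p3["z"] = 3; // a property added after creation...
delete p3["y"]; // ...and one removed

// One call site, three layouts: a fast path an engine specializes for the
// first layout it sees typically has to be guarded and may be thrown away later.
function getX(p: Bag): number {
  return p["x"];
}

console.log(shapeOf(p1), shapeOf(p2), shapeOf(p3)); // x,y y,x x,z
console.log(getX(p1) + getX(p2) + getX(p3)); // 3
```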

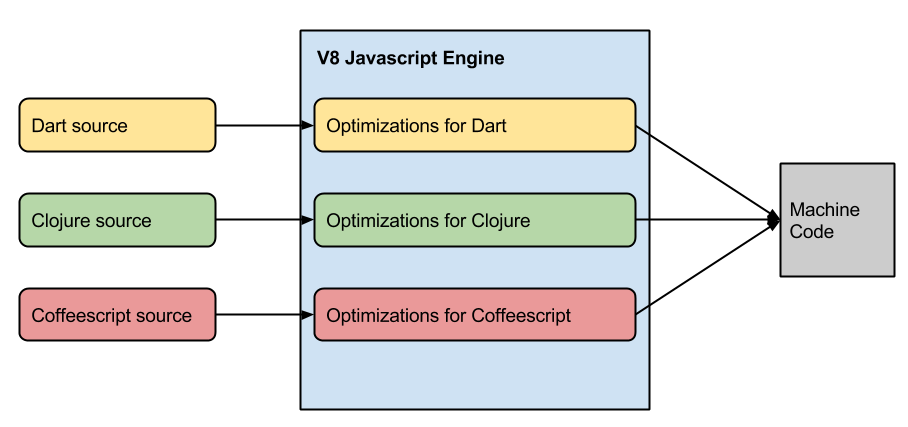

This is basically what the Dart VM is. But consider a JavaScript VM that COULD optimize for those things because it knows the language the JavaScript source was compiled from. Take V8 and the Dart VM as an example. V8 cannot optimize objects as aggressively as it otherwise could, because objects can change at runtime. But what if they couldn’t change? And what if the engine knew that ahead of time? A segment of the engine could make assumptions based on that guarantee and generate even more performant machine code.

Granted, this could get unwieldy (even near impossible) as the number of languages compiling to JavaScript grows, but it’s interesting food for thought. If the engine can make assumptions based on how the JavaScript was compiled, it can boldly go where no optimization has gone before.
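
Plain JavaScript already has one way to promise that an object’s set of properties won’t change: `Object.seal`. The sketch below imagines a compiler emitting sealed objects for a source language whose objects have a fixed layout. Whether any engine turns that promise into faster machine code is exactly the speculative part of the idea above, so nothing here assumes it does; `FixedPoint`, `makePoint`, and `tryAddProperty` are hypothetical names.

```typescript
// A sketch of what a compiler could emit to promise a fixed object layout.
// Object.seal is standard JavaScript: it forbids adding or deleting
// properties but still lets existing ones be reassigned.

interface FixedPoint {
  x: number;
  y: number;
}

// Hypothetical compiler output for a "point" type in a fixed-layout language.
function makePoint(x: number, y: number): FixedPoint {
  return Object.seal({ x, y });
}

// Attempt to add a property; report whether it actually landed on the object.
function tryAddProperty(obj: object, key: string, value: number): boolean {
  try {
    (obj as Record<string, number>)[key] = value;
  } catch {
    // Strict-mode code throws a TypeError here; sloppy-mode code fails silently.
  }
  return Object.prototype.hasOwnProperty.call(obj, key);
}

const pt = makePoint(1, 2);
pt.x = 10; // fine: reassigning an existing property

console.log(pt.x, tryAddProperty(pt, "z", 3), Object.isSealed(pt)); // 10 false true
console.log(tryAddProperty({ x: 1, y: 2 }, "z", 3)); // true
```

The contrast in the last line is the point: an ordinary object can grow at any time, while the sealed one keeps exactly the layout it was created with.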

Finally, and most recently, the Angular team at Google announced a new syntax they are using to write Angular 2.0, called ‘AtScript’.

https://docs.google.com/document/d/11YUzC-1d0V1-Q3V0fQ7KSit97HnZoKVygDxpWzEYW0U/edit#

AtScript is not a new language, according to the Angular team (although I’m struggling to believe them). It takes the basics of ES6 plus TypeScript (a superset of JavaScript developed by Microsoft) and, through some syntactic sugar, lets the developer infuse things such as annotations, types, and introspection into the JavaScript language. It then uses the Traceur compiler to transpile its syntax down to ES6 code. For example, AtScript will allow the developer to use annotation syntax familiar from Java, Dart, and TypeScript. These annotations will provide things such as type inference, field inference, generics, and metadata to JavaScript. Most importantly, in my opinion, it will allow for type introspection: the ability to examine an object’s types at runtime.
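
Because TypeScript’s type annotations are erased at compile time, runtime type introspection needs the type information stored somewhere the running program can read it. The sketch below does that by hand, keeping metadata in static fields on the class. It is a hypothetical illustration of the general idea, not AtScript’s actual output format: the `annotations` and `parameters` fields, `Component`, `getAnnotation`, and `instantiate` are all assumptions.

```typescript
// Annotations as runtime metadata, sketched in plain TypeScript.
// All field and class names here are hypothetical, chosen for illustration.

type Ctor<T = unknown> = new (...args: any[]) => T;

interface Annotated {
  annotations?: unknown[];
  parameters?: Ctor[];
}

class Component {
  constructor(readonly selector: string) {}
}

class Logger {
  log(msg: string): string {
    return "[log] " + msg;
  }
}

class Greeter {
  // Metadata a compiler could emit for an annotated class and a typed
  // constructor parameter.
  static annotations = [new Component("greeter")];
  static parameters = [Logger];

  constructor(private logger: Logger) {}

  greet(who: string): string {
    return this.logger.log("hello " + who);
  }
}

// Introspection: find the first annotation of a given kind on a class.
function getAnnotation<A>(cls: Ctor, kind: Ctor<A>): A | undefined {
  const list = (cls as unknown as Annotated).annotations || [];
  for (const a of list) {
    if (a instanceof kind) return a as A;
  }
  return undefined;
}

// Introspection: build an instance by reading its constructor's parameter types.
function instantiate<T>(cls: Ctor<T>): T {
  const deps = (cls as unknown as Annotated).parameters || [];
  return new cls(...deps.map((dep) => instantiate(dep)));
}

const greeter = instantiate(Greeter);
console.log(getAnnotation(Greeter, Component)?.selector); // greeter
console.log(greeter.greet("AtScript")); // [log] hello AtScript
```

Reading constructor parameter types at runtime is what lets `instantiate` wire up `Logger` without any hand-written glue, which is one plausible reason a framework like Angular would want introspection in the first place.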

All of this sounds suspiciously similar to Dart, but the explanation given at the above link is that Dart is semantically different from JavaScript and the correlation between the two is too difficult to maintain. Also, and this is a point the document doesn’t mention, Dart’s adoption is nowhere near that of JavaScript, so building on it wouldn’t make sense. Instead, the goal of AtScript is simply to keep the familiarity of JavaScript while adding some much-needed type safety to its features.

As mentioned, Angular 2.0 will be written in AtScript, which is a pretty huge deal and could result in a few different outcomes:

- It could further push the JavaScript community to add more of the features that AtScript provides to the ECMAScript spec (the CoffeeScript effect).

- It could open the door to better optimizations in JavaScript engines, since certain properties of objects that were previously unknown would now be known ahead of time.

- It could create a whole new standard or specification.

Angular is arguably the most popular JavaScript framework around, and the announcement that it will use a superset of TypeScript (which is itself a superset of JavaScript) to construct its internals is big news. It further validates the trend that JavaScript is quickly turning into a target language.

Debugging

One question you may be asking is: how on earth would you debug this nonsense? Compiler output can look like a bunch of hieroglyphics. So if my web app is running cross-compiled JavaScript and I receive some crazy error deep in the bowels of the output, how will I know which source it corresponds to? The answer is source maps.

Source maps provide a way to cross-reference your compiled JavaScript code with the actual source that produced it. The concept has been in use for a while with minified JavaScript, and its approach can be applied to all the other source-to-JavaScript cousins. The inherent problem, though, is that the relationship between source and output isn’t always one-to-one. Clojure is an extremely succinct language, so you might write 4 lines of Clojure code that generate 50 lines of JavaScript. If line 36 throws an undefined error, how will you pair that back up to the source? In the aforementioned JavaScript Jabber episode, Jeremy Ashkenas mentions that readable compiled JavaScript is arguably more important for this reason. The problem with THAT is that generating readable compiled JavaScript is just not always feasible. The further a language is from JavaScript, the more difficult it becomes.
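
Under the hood, a source map’s `mappings` field is a compact string of Base64 VLQ-encoded numbers, as defined by the Source Map Revision 3 format. The sketch below decodes it into absolute positions. It is a minimal illustration that skips the optional name index and most error handling; in practice, tools typically rely on an existing library such as Mozilla’s `source-map` package. `decodeVlq`, `decodeMappings`, and `Mapping` are illustrative names.

```typescript
// Decoding the "mappings" field of a Source Map (revision 3) file.
// Generated lines are separated by ";" and segments by ",". Each segment is a
// list of Base64 VLQ numbers: generated column, source index, original line,
// original column, and an optional name index. Values are deltas from the
// previous segment; the generated column restarts at 0 on every line.

const BASE64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Decode one segment such as "AACA" into its numbers.
function decodeVlq(segment: string): number[] {
  const values: number[] = [];
  let acc = 0;
  let shift = 0;
  for (let i = 0; i < segment.length; i++) {
    const digit = BASE64.indexOf(segment.charAt(i));
    if (digit === -1) throw new Error("invalid Base64 character in " + segment);
    acc |= (digit & 31) << shift; // the low 5 bits carry data
    if (digit & 32) {
      shift += 5; // the 32 bit means "more digits follow"
    } else {
      // The lowest bit of the assembled number is the sign.
      const magnitude = acc >>> 1;
      values.push(acc & 1 ? -magnitude : magnitude);
      acc = 0;
      shift = 0;
    }
  }
  if (shift !== 0) throw new Error("truncated VLQ value in " + segment);
  return values;
}

interface Mapping {
  generatedLine: number; // all positions are 0-based
  generatedColumn: number;
  sourceIndex: number;
  originalLine: number;
  originalColumn: number;
}

// Turn a whole "mappings" string into absolute positions (name indexes ignored).
function decodeMappings(mappings: string): Mapping[] {
  const result: Mapping[] = [];
  let sourceIndex = 0;
  let originalLine = 0;
  let originalColumn = 0;
  const lines = mappings.split(";");
  for (let line = 0; line < lines.length; line++) {
    let generatedColumn = 0;
    for (const segment of lines[line].split(",")) {
      if (segment === "") continue;
      const v = decodeVlq(segment);
      generatedColumn += v[0];
      if (v.length < 4) continue; // a 1-field segment maps to no source position
      sourceIndex += v[1];
      originalLine += v[2];
      originalColumn += v[3];
      result.push({ generatedLine: line, generatedColumn, sourceIndex, originalLine, originalColumn });
    }
  }
  return result;
}

console.log(JSON.stringify(decodeVlq("gB"))); // [16]
const third = decodeMappings("AAAA,IAAI;AACA")[2];
console.log(third.generatedLine, third.generatedColumn, "->", third.originalLine, third.originalColumn); // 1 0 -> 1 4
```

The last line reads as “generated line 1, column 0 came from original line 1, column 4”. Nothing stops several generated segments from pointing at the same original position, which is how one terse line of Clojure can account for many lines of output.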

Still, the possibilities are endless. Once I realized what was actually happening in this ecosystem of languages compiling to JavaScript, I began to see that a sea change was underway. In much the same way that developers created abstractions over top of assembly to generate code that ran on hardware, we are entering a world where we create abstractions that run inside the browser.

So, I’ve learned to embrace it and even champion it. I still don’t think all of the syntactic sugar that CoffeeScript provides is needed, but some of it is extremely valid, as the migration of those features into the ECMAScript specification shows. What makes this all even more incredible is that JavaScript itself is pretty easy to write and becoming ever more ubiquitous. So the more languages crop up that compile to it, the more they will drive the language forward and make it more powerful. And in that case, everybody wins.

Well, everybody except the hipsters.