There is a lot of talk these days about 100% test coverage and whether we should strive to reach it. I honestly think that 100% test coverage is a hot topic because it is easy to measure.

To me, the most important question is: how much test coverage is enough?

I would like to discuss test coverage by making an analogy to exercise.

We all know that exercising, like testing, is a good thing. We also know that there is a range, with a minimum and a maximum, within which exercise is beneficial. Too little and nothing happens; too much and it can hurt and even cause permanent damage.

How much exercise is enough? Will I be healthy if I start running a few miles every week? Probably yes. Would I be even healthier if I tried to run 1000 miles every week? Most likely not.

It is the same with testing. Can I say for certain that a product with 60% test coverage is “healthier” than a product with 1%? Not for certain, but very plausibly yes, to the point that I would bet on it, and I’m not a gambler. What about a product with 40% coverage versus one with 50%? From a “health” perspective, it is hard to say that one is better than the other by looking at coverage alone. (Whether high test coverage is a cause or a consequence of a product’s fitness is a topic for another post.)

It is easy to agree that a product with 0% test coverage should strive to reach, let’s say, 10%. Many people will agree that moving from 10% to 20% is also feasible. But when do you stop?

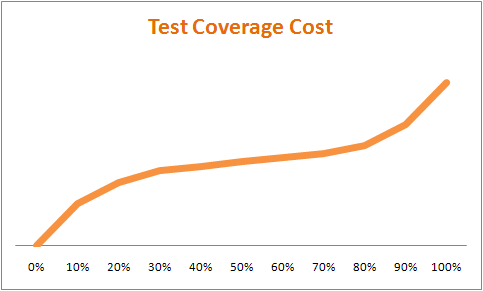

So the question becomes one of return on investment. How much “healthier” does the product get for each test written? Although I don’t have statistical data, my experience tells me that the cost is not linear. There is an initial upfront cost (really an investment) to set up the testing framework. Some projects will find this easier and others harder, depending on the nature of the code base, the types of integration, the developers’ skills, etc. After this initial bump, the cost is almost linear: most methods cost about the same to test. In most cases, though, teams postpone testing some methods because they are difficult to test. Maybe the method needs to connect to a third-party system, maybe the test cannot be repeated, or there are other reasons. These postponed tests are the ones that drive up the cost of coverage, and reaching 100% test coverage means investing an extra amount of resources in them.
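As an illustration of why those postponed tests are expensive, and of what making such a method testable can look like, here is a minimal Python sketch. It assumes a hypothetical `convert_price` function that would normally call a third-party exchange-rate service; passing the rate lookup in as a parameter (`fetch_rate`, also hypothetical) lets a test substitute a fake, so the test needs no network access and gives the same result every run. It is one common approach, not the only one.

```python
from typing import Callable


# Hypothetical example: in production, `fetch_rate` would call a
# third-party exchange-rate service. Injecting it as a parameter means
# tests can pass a fake instead of hitting the real system.
def convert_price(amount: float, currency: str,
                  fetch_rate: Callable[[str], float]) -> float:
    """Convert an amount in USD to `currency` using the supplied rate lookup."""
    if amount < 0:
        raise ValueError("amount must be non-negative")
    return round(amount * fetch_rate(currency), 2)


def test_convert_price_uses_rate() -> None:
    # A dictionary lookup stands in for the third-party service,
    # so this test is fast and repeatable.
    fake_rates = {"EUR": 0.5}
    assert convert_price(10.0, "EUR", fake_rates.__getitem__) == 5.0


def test_convert_price_rejects_negative() -> None:
    # The fake rate is irrelevant here; validation happens first.
    try:
        convert_price(-1.0, "EUR", lambda _currency: 1.0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a negative amount")


if __name__ == "__main__":
    test_convert_price_uses_rate()
    test_convert_price_rejects_negative()
    print("all tests passed")
```

The refactoring itself costs time, and the real integration still needs testing somewhere, which is part of why the last stretch of coverage is the most expensive.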

Just as an active life is a good way to improve our overall health, an active testing life is a good way to improve our product’s “testability” and coverage. TDD (test-driven development) is a well-known technique that can improve the usability of the API, reduce complexity, and naturally increase test coverage.

But most importantly, define what your organization’s goals for high code coverage are, and create ways to measure them. If you don’t know what you’re trying to achieve with high coverage, how can you tell whether your “workout” is working? What do you want to accomplish by increasing test coverage?

- reduced number of bugs open after each release?

- less time spent detecting and fixing bugs?

- increased efficiency of the team (delivering more with less)?

There are different ways to achieve the same goals, and from a business perspective, the best one is the least expensive.

It is well known that the earlier a bug is found, the cheaper it is to fix. High test coverage is one of the best ways to detect bugs early and keep them at bay, but it is not one-size-fits-all.

Sometimes a project faces bigger threats that require investing its limited time and resources in other initiatives.

Every test you create will eventually require maintenance, so it is important to choose wisely what to test.

Just because your test coverage can be measured doesn’t mean you must chase the 100% mark. But the reality is that testing is like exercising: most of us actually need to do more.

Feel free to use the comments section to share your thoughts on this topic.